Migrating Mastodon storage to S3-compatible storage

If you just want to know how to perform the migration, jump to the "The solution HOWTO" section at the bottom.

This post is written so that even entry-level admins who maintain their own Mastodon instances can follow it. That's why, this time, I have included some screenshots to make things clearer.

The problem

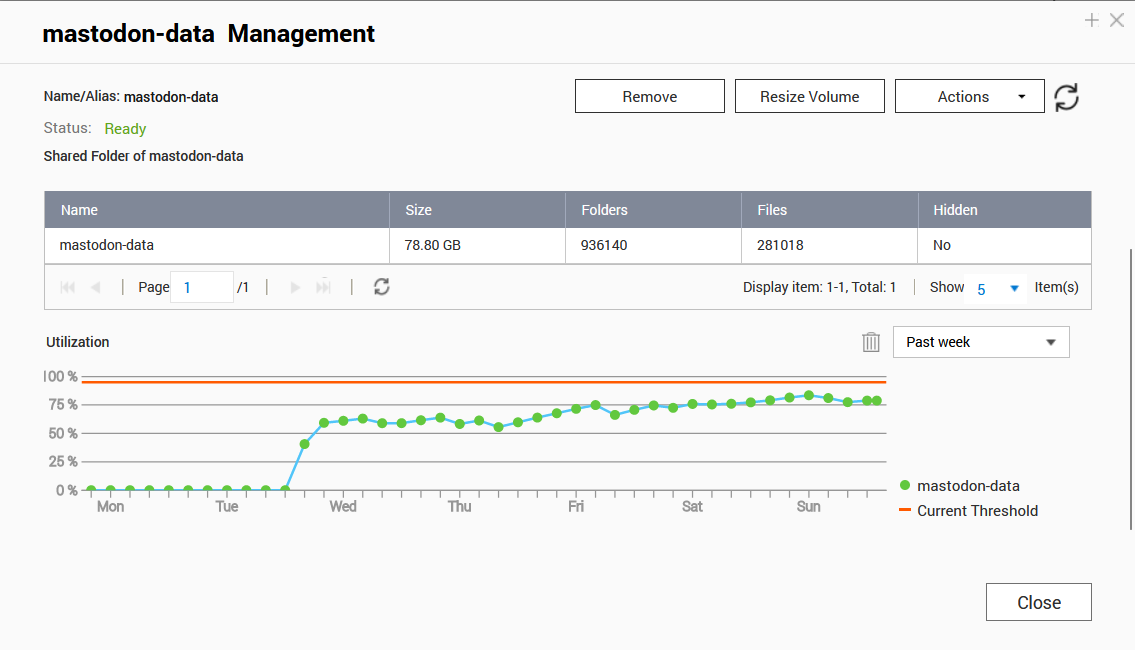

Recently, I enabled a few relays on my Mastodon instance to propagate our content across the network. After that, however, storage usage grew rapidly:

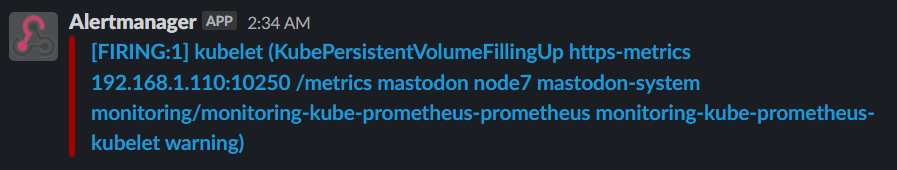

See how quick it was? Since then, I have increased the capacity of that disk volume a few times (I use QNAP for k8s storage here), but this alert just wouldn't go away:

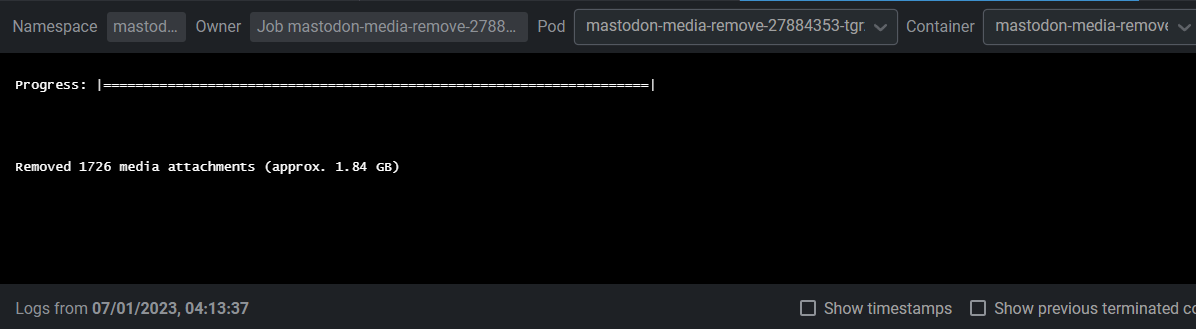

I have the media-remove process enabled, which currently deletes cached data older than 3 days. Still, the data grows faster than it is removed.

Env setup

My Mastodon instance is a Kubernetes deployment based on the upstream helm chart, which I edited a bit to fit my use case. I disabled the Postgres subchart and deployed Postgres separately using the Bitnami postgres-ha helm chart.

The storage is provided by QNAP hardware. I have 2x500GB SSDs there in RAID1, and I expose this storage via nfs-subdir-external-provisioner. As I have enabled snapshots for some workloads, in practice I have around 400GB of usable storage. Mastodon storage was rising quickly, almost up to 100GB, which is almost 25% of the whole space. From this perspective, the cost of hosting Mastodon grew considerably.

Finding the solution

I thought about a few solutions here:

- Shorten the cache TTL by lowering the number of days in the media-remove job. I have it set to 3 days, but could lower it to e.g. 1 day. However, I realized that several tags I follow are not updated that often, so it's normal that I see posts from a few days back on my timeline. Those 3 days seem really reasonable, and I'm even thinking about raising this number to e.g. 5 days. So this approach does not fit my situation and requirements.

- Resize the volume to e.g. 200GB. This would be far too expensive. This QNAP (including disks) cost around $400. If I sacrifice half of its storage just for Mastodon, that share is worth around $200, plus maintenance, energy etc. IMO, that's way too expensive for this kind of workload (even though it's a one-off cost, it doesn't amortize too well).

- Migrate to a secondary QNAP with standard HDDs. I have a secondary device with more storage (2x2TB), but it is way slower hardware (same QNAP, but slower disks, regular hard drives). I use it mainly for storing snapshots, backups and some other heavy, rarely accessed data. So this wouldn't be the best course of action either, as it would put much higher pressure on those drives.

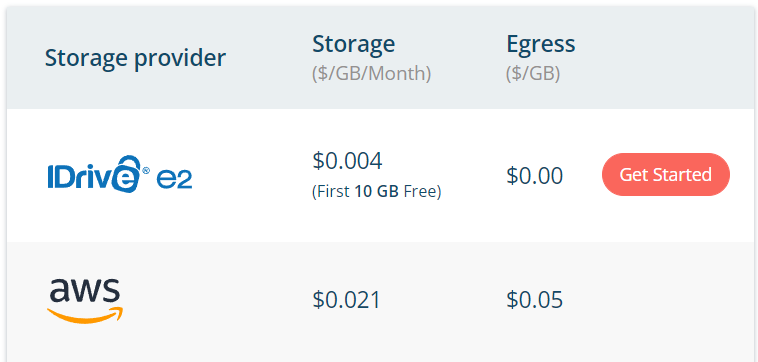

- Migrate to the cloud. Mastodon can read and write assets and other static files from/to S3-compatible storage. I did some research and compared 3 providers: AWS S3, Cloudflare R2 and iDrive E2. The last one is the winner here: it's the cheapest, it provides some basic ACLs (public read-only for my use case) and there are no costs related to data transfer (neither ingress nor egress).

Exploring iDrive E2

I found this service just recently, while searching for possible storage vaults for my QNAP backups/snapshots. I ran a proof of concept with it:

- Created a bucket for Mastodon

- Enabled public-read-only permissions (it's also possible to upload a custom IAM policy, as the service is S3-compatible)

- Integrated it with Cloudflare CDN (by generating Cloudflare TLS certs and integrating DNS subdomains)

- Copied a small set of Mastodon data into this new bucket using aws s3 sync

- Switched Mastodon to read/write into this S3 bucket to confirm it works properly. It does!

So my estimated cost now (assuming 200GB of storage use) is around 200 * $0.004 = $0.8 monthly (minus the first 10GB, which is free). That's less than a dollar per month, or $9.6 YEARLY for those 200GB. Way, way cheaper than hosting it on my SSDs.
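To double-check the arithmetic, here is a minimal shell sketch. It only uses the numbers quoted above ($0.004 per GB per month, first 10GB free); the function name estimate_cost is made up for illustration, and actual billing rules may differ.

```shell
#!/bin/sh
# Estimate monthly and yearly storage cost.
# Arguments: stored GB, price per GB per month (USD), free GB allowance.
estimate_cost() {
  awk -v gb="$1" -v price="$2" -v free="$3" 'BEGIN {
    billable = gb - free
    if (billable < 0) billable = 0
    monthly = billable * price
    printf "monthly=%.2f yearly=%.2f\n", monthly, monthly * 12
  }'
}

estimate_cost 200 0.004 0    # the rough estimate from the text
estimate_cost 200 0.004 10   # same, with the first 10GB free
```

The first call reproduces the $0.80 / $9.60 figures; the second shows that the free tier lowers them slightly, to $0.76 monthly.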

The solution HOWTO

The process is rather straightforward; just customize it to match your environment.

- Register an iDrive E2 account and enable billing by upgrading your plan (whether yearly or monthly). Without a billed account, you won't be able to open the bucket to the world (make it semi-public). Choose a region close to your site.

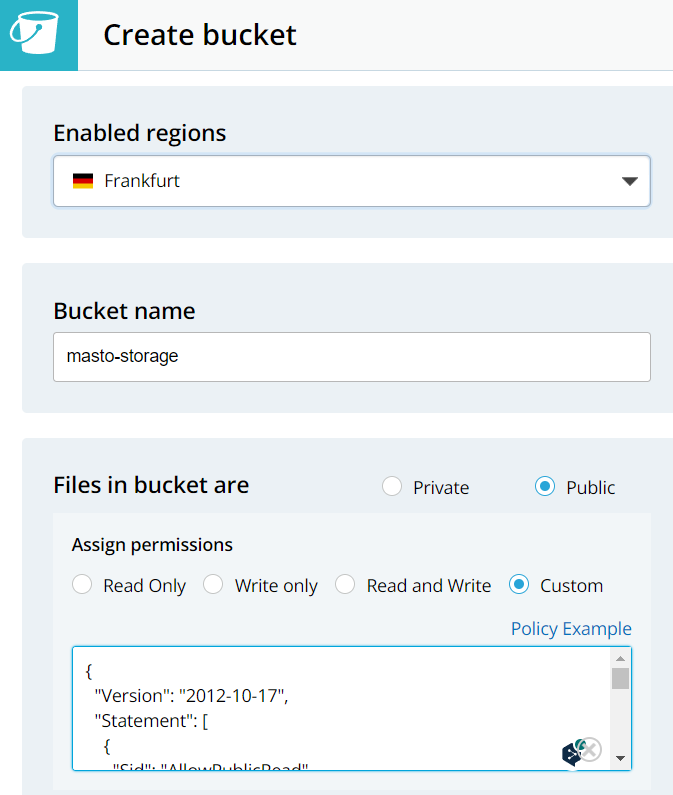

- When it's done, create a new bucket. As you can see, there is an option to enable Public access with a read-only setting. IMO, this is not too secure, as it may also allow a user to iterate over files (fetch the directory index), which is not required and can be dangerous; there is no need for anyone to fetch the index of your storage. Thus, it's much safer to specify a strict IAM policy that only allows fetching a particular file by its URL (and Mastodon knows it):

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "AllowPublicRead",
          "Effect": "Allow",
          "Principal": {
            "AWS": [
              "*"
            ]
          },
          "Action": [
            "s3:GetObject"
          ],
          "Resource": [
            "arn:aws:s3:::bucket-name/*"
          ]
        }
      ]
    }

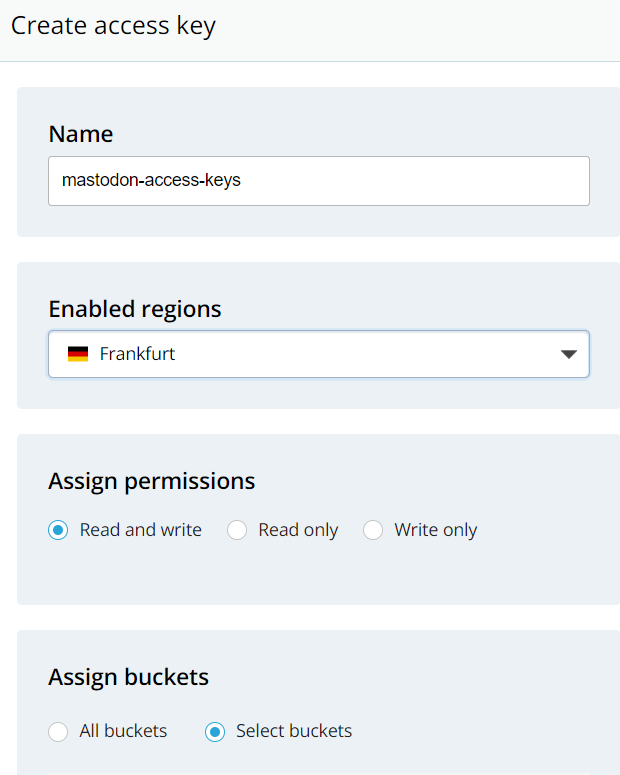
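If you prefer the command line over the web console, the same policy could be generated for your own bucket name with a small helper. This is only a sketch: render_policy and the bucket name my-mastodon-bucket are hypothetical, and the commented-out apply step assumes the provider accepts the standard S3 put-bucket-policy call, which is not verified here.

```shell
#!/bin/sh
# Print a read-only bucket policy for the bucket name given as $1.
# It grants s3:GetObject only, so objects can be fetched by URL
# but the bucket contents cannot be listed.
render_policy() {
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowPublicRead",
      "Effect": "Allow",
      "Principal": { "AWS": ["*"] },
      "Action": ["s3:GetObject"],
      "Resource": ["arn:aws:s3:::${1}/*"]
    }
  ]
}
EOF
}

# Hypothetical bucket name, used only for illustration.
render_policy "my-mastodon-bucket"

# Assumption: the provider supports the S3 PutBucketPolicy API.
# To apply, save the output to policy.json and run (needs aws-cli and credentials):
# aws s3api put-bucket-policy \
#   --endpoint-url "https://<your-endpoint-url>" \
#   --bucket "my-mastodon-bucket" \
#   --policy file://policy.json
```

Generating the file from the bucket name avoids the easy mistake of leaving the bucket-name placeholder in the Resource line.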

- Generate Access Keys for your account (side menu, Access Keys):

- Now, the easiest way to use those keys is to export them as env vars, e.g.:

    export AWS_ACCESS_KEY_ID="<your access key>"
    export AWS_SECRET_ACCESS_KEY="<your secret access key>"

- Install aws-cli on your machine, so that you can verify it all works properly.

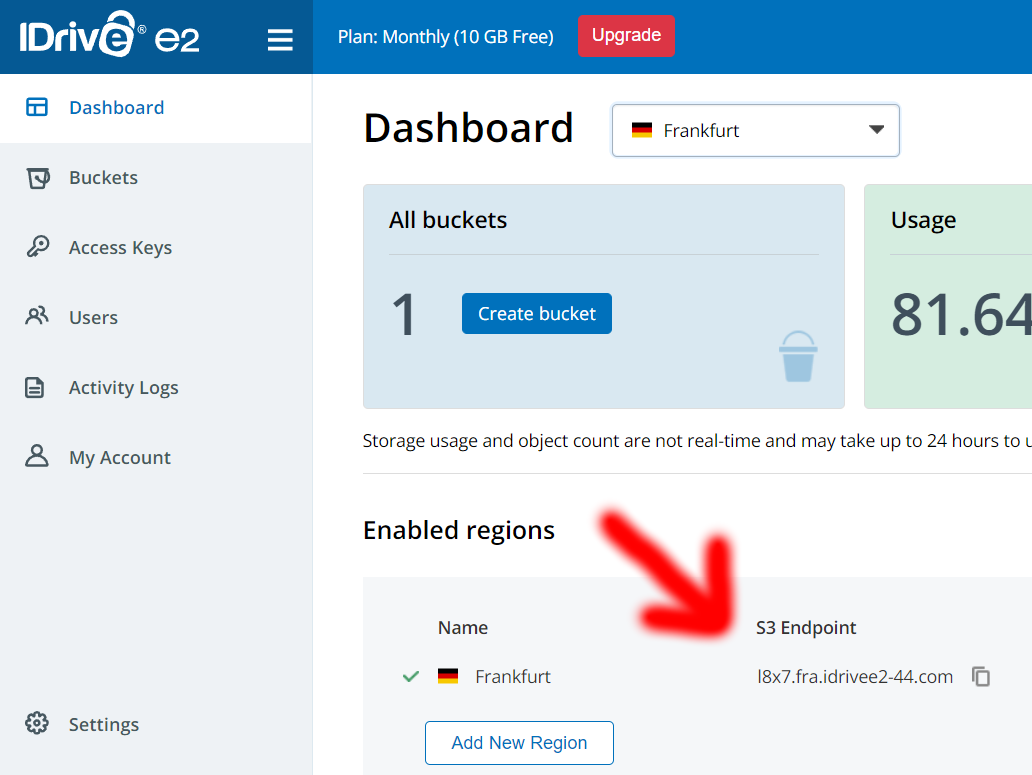

- Having aws-cli installed and security credentials exported to environment variables, you can verify it all works properly. First, take note of your region endpoint (you can see it in the E2 dashboard, assigned to the region you chose).

- Now you should be able to run the following command. It lists the files in your bucket (the list will probably be empty, as you haven't uploaded anything yet):

    aws s3 --endpoint-url https://<your-endpoint-url> ls s3://<your-bucket-name>/

- Try sending some image file:

    aws s3 --endpoint-url https://<your-endpoint-url> cp image.jpg s3://<your-bucket-name>/image.jpg

- Now try accessing this image in your browser's private mode. This way you are unauthenticated from the E2 perspective, but as you have just allowed public access to specific files, it should just work. The URL for your file can be built in two ways:

  - You may use the region endpoint URL (the one mentioned earlier). This way, the URL looks like https://<your-endpoint-url>/<your-bucket-name>/image.jpg

  - You may also use the bucket endpoint, which looks like https://<your-bucket-name>.<your-endpoint-url>/image.jpg

  Both methods should work just fine at this point.
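The two URL forms described above can be spelled out with a pair of tiny helpers. This is a sketch with made-up values: s3.example.com and my-mastodon-bucket are placeholders, not real endpoints, and the function names are hypothetical.

```shell
#!/bin/sh
# Arguments for both helpers: endpoint host, bucket name, object key.

# Region-endpoint (path-style) URL: https://<endpoint>/<bucket>/<key>
path_style_url() {
  printf 'https://%s/%s/%s\n' "$1" "$2" "$3"
}

# Bucket-endpoint (virtual-hosted-style) URL: https://<bucket>.<endpoint>/<key>
bucket_style_url() {
  printf 'https://%s.%s/%s\n' "$2" "$1" "$3"
}

# Hypothetical values for illustration only.
endpoint="s3.example.com"
bucket="my-mastodon-bucket"

path_style_url   "$endpoint" "$bucket" "image.jpg"
bucket_style_url "$endpoint" "$bucket" "image.jpg"

# To check anonymous access without a browser, a request like this should
# report HTTP 200 for either URL (needs network access, so it is commented out):
# curl -s -o /dev/null -w '%{http_code}\n' "$(path_style_url "$endpoint" "$bucket" image.jpg)"
```

Checking with curl has the same effect as the private browser window: no credentials are sent, so a 200 response means the public-read policy is in effect.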

- Now, if the above works fine, comes the time-consuming part: you need to copy your existing data to S3. As Mastodon is a k8s deployment in my case, I needed to create a pod (one that includes the aws-cli binary) and attach the Mastodon storage volumes to it. The pod definition was as follows:

    apiVersion: v1
    kind: Pod
    metadata:
      name: aws-cli-copy
      namespace: mastodon
    spec:
      volumes:
        - name: system-pv
          persistentVolumeClaim:
            claimName: mastodon-system
        - name: assets-pv
          persistentVolumeClaim:
            claimName: mastodon-assets
      containers:
        - name: datacopy
          image: amazon/aws-cli
          env:
            # generally it's safer to read secret values from k8s secrets, but for
            # one-shot job, and under my infra circumstances, this is fine:
            - name: AWS_ACCESS_KEY_ID
              value: "<your-access-key-id>"
            - name: AWS_SECRET_ACCESS_KEY
              value: "<your-secret-access-key>"
          command:
            - "sleep"
            - "36000"
          volumeMounts:
            - mountPath: "/mnt/mastodon-system"
              name: system-pv
            - mountPath: "/mnt/mastodon-assets"
              name: assets-pv

- After the pod was created (kubectl apply -n mastodon -f pod.yaml), I just entered its terminal (kubectl exec -it -n mastodon aws-cli-copy -- bash).

- Now, the data-sync command. From the directory where all mastodon-system files were stored (in my case /mnt/mastodon-system), I ran:

    aws s3 --endpoint-url https://<your-endpoint-url> sync . s3://netrunner-masto/ --dryrun

  Mind the --dryrun part (netrunner-masto is my bucket name; use yours). It tells the command to just display what it would change instead of sending files. It's better to verify that it does what we want before running the actual command. After verification, just remove the --dryrun flag and run the command again.

- After the files are synchronized, you need to reconfigure Mastodon to make it use this S3-compatible storage. As I use the helm chart, the values to change were:

    s3:
      enabled: true
      access_key: "<your-access-key>"
      access_secret: "<your-secret-access-key>"
      existingSecret: ""
      bucket: "<your-bucket-name>"
      endpoint: https://<your-region-endpoint-url>
      hostname: <your-region-endpoint-url>
      region: ""
      alias_host: "<your-region-endpoint-url>/<your-region-bucket>"

- It's actually better and safer to put the access key/secret into a k8s secret and use existingSecret in the above YAML config.

- If you're not using k8s, but a standard VM/bare-metal deployment, the Mastodon documentation explains which env vars to change in order to enable S3. I believe it would look like:

    S3_ENABLED=true
    S3_BUCKET=<your-bucket-name>
    S3_PROTOCOL=https
    S3_HOSTNAME=<your-region-endpoint-url>
    S3_ENDPOINT=https://<your-region-endpoint-url>
    S3_ALIAS_HOST=<your-region-endpoint-url>/<your-region-bucket>
    AWS_ACCESS_KEY_ID=<your-access-key>
    AWS_SECRET_ACCESS_KEY=<your-secret-access-key>
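Before restarting, it may be worth checking that none of these variables was left out or empty. The helper below is a hedged sketch: check_s3_env is a hypothetical function, and the env file path in the usage comment is an assumption about a typical non-k8s install, so adjust it to your setup.

```shell
#!/bin/sh
# Report which S3-related variables are missing or empty in an env file.
# Prints one missing variable name per line; returns non-zero if any is missing.
check_s3_env() {
  s3_env_file="$1"
  s3_env_missing=0
  for s3_env_name in S3_ENABLED S3_BUCKET S3_PROTOCOL S3_HOSTNAME S3_ENDPOINT \
                     AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY; do
    # A variable counts as set when a line "NAME=<non-empty value>" exists.
    if ! grep -Eq "^${s3_env_name}=.+" "$s3_env_file"; then
      echo "$s3_env_name"
      s3_env_missing=1
    fi
  done
  return "$s3_env_missing"
}

# Usage (the path is an assumption; point it at your own env file):
# check_s3_env /home/mastodon/live/.env.production && echo "S3 settings look complete"
```

S3_ALIAS_HOST is deliberately not in the required list here, since it only changes the public URL and is treated as optional in this sketch.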

- Now, after restarting/redeploying Mastodon, it all should just work.

- One more thing left here. I had this mastodon-assets volume, which I didn't migrate. The assets stored there are still served properly, so I believe they are provided by the webserver. Serving static files through this webserver may be inefficient. However, as I use a CDN for caching static content, I don't really care. If this starts to be problematic from the performance side, I'll take a closer look at it.
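The "dry run first, then the real copy" routine from the data-sync step can also be wrapped in a small helper, so the real transfer only happens when asked for explicitly. This is a sketch, not the exact procedure used above: sync_media and the AWS_BIN override are hypothetical names introduced here, and only the aws s3 sync invocation itself comes from the steps above.

```shell
#!/bin/sh
# Run "aws s3 sync" as a dry run unless the fourth argument is "apply".
# Arguments: endpoint host, bucket name, source directory, optional mode.
# AWS_BIN can be overridden (e.g. AWS_BIN=echo) to preview the command line.
sync_media() {
  if [ "${4:-dryrun}" = "apply" ]; then
    "${AWS_BIN:-aws}" s3 --endpoint-url "https://$1" sync "$3" "s3://$2/"
  else
    "${AWS_BIN:-aws}" s3 --endpoint-url "https://$1" sync "$3" "s3://$2/" --dryrun
  fi
}

# Preview only: prints the command line that would run, without calling aws.
AWS_BIN=echo sync_media "<your-endpoint-url>" "<your-bucket-name>" /mnt/mastodon-system
```

Inside the copy pod you would call sync_media with your real endpoint and bucket, review the dry-run output, and then repeat the call with apply as the last argument.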

CDN considerations

The above setup has one disadvantage. The following setting:

    alias_host: <your-region-endpoint-url>/<your-region-bucket>

means that each time content is downloaded, it is fetched directly from the S3-compatible storage. In the case of iDrive E2 it doesn't really matter, as there are no fees for egress traffic (downloading files from your drive). But if this were AWS S3, you would pay for that outgoing traffic.

To prevent this, we can use a Content Delivery Network (CDN), which acts as a caching layer in front of this S3-compatible drive. It adds reliability and speeds up content downloads (CDNs have many nodes across the globe, and the client is usually connected to the closest one, which means lower round-trip times).

But this will be a subject of another blog post.